Context and background

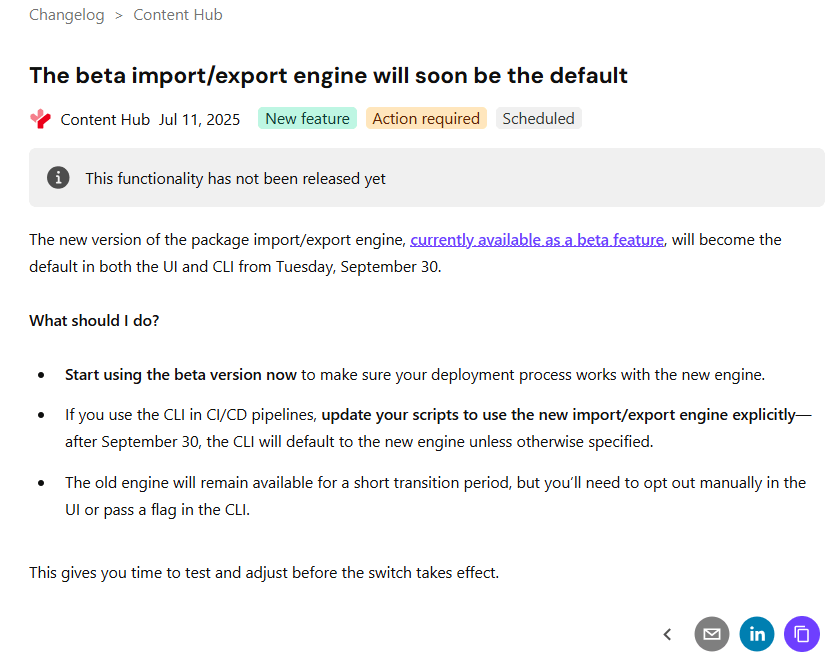

If you are already using DevOps for deployments to your Content Hub environments, you are probably aware of the breaking change that Sitecore introduced a few months ago. You can read the full notification on the Sitecore Support page. According to the notification, the new version of the package import/export engine became the default in both the UI and the CLI from Tuesday, September 30. Because of the breaking changes, existing CICD pipelines won’t work as they are. In fact, there is a high risk of breaking your environments if you try to use existing CICD pipelines without refactoring.

In this blog post, I will look in detail at what breaking changes were introduced and how to realign your existing CICD pipelines to work with the new import/export engine.

So what has changed in the new Import/Export engine?

Below is a screenshot from the official Sitecore docs summarizing the change. You can also access the change log here.

There are no further details in the docs on the specifics of the breaking change. However, it is straightforward to figure out that Sitecore fundamentally changed the package architecture in the new import/export engine.


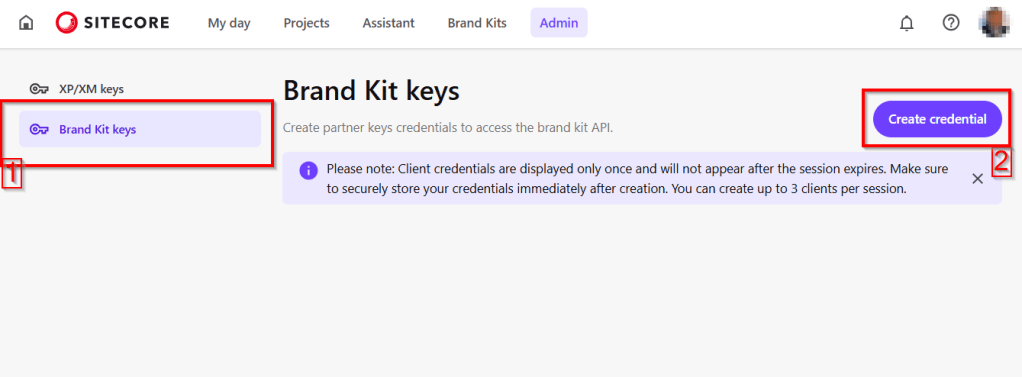

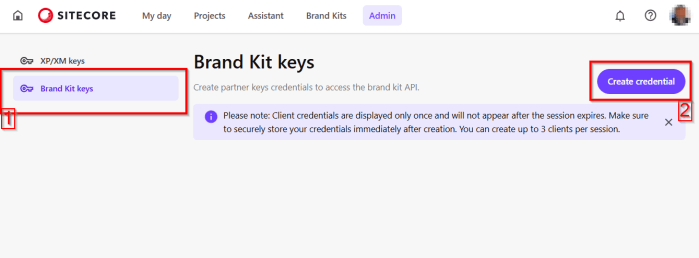

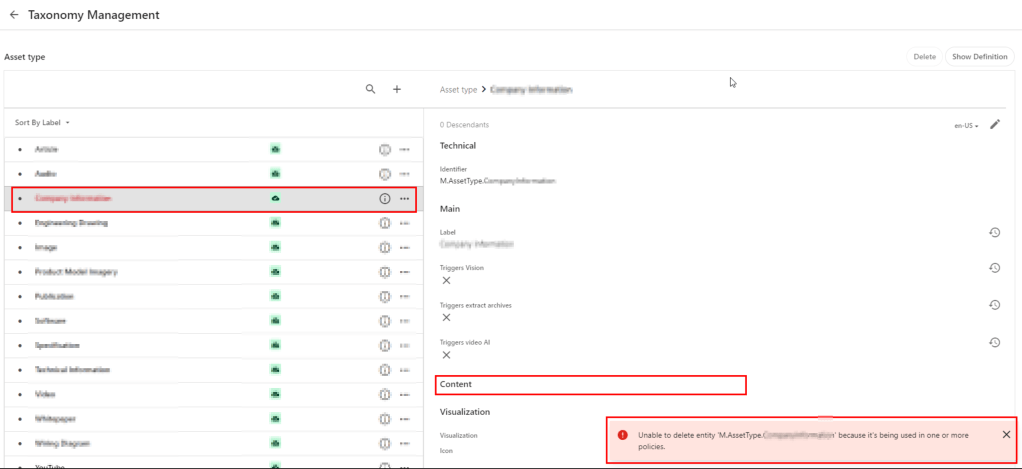

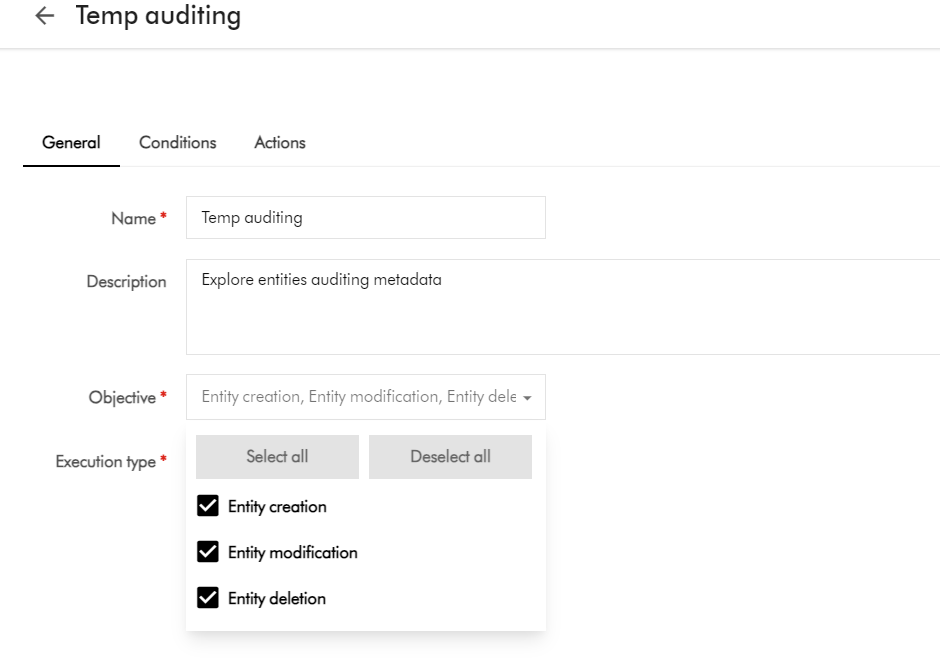

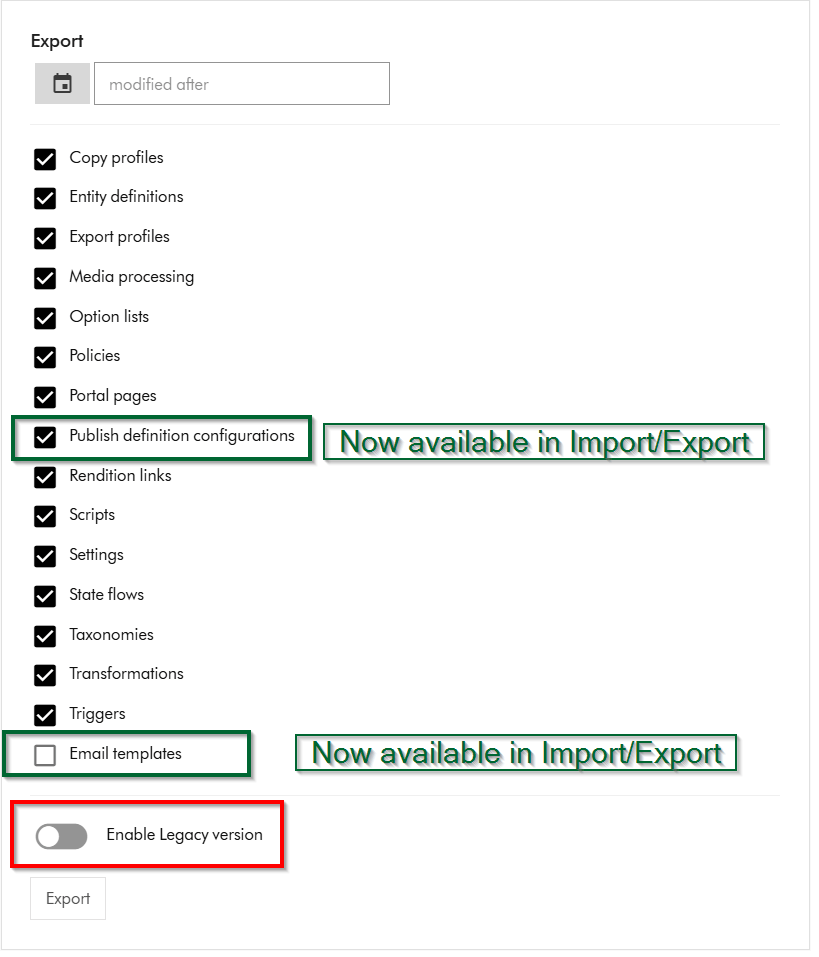

Within the Sitecore Content Hub Import/Export UI, you have the option to export components using either the previous (legacy) engine or the new engine. As shown below, there is a toggle for Enable Legacy version which, when switched on, lets you export a package with the legacy engine.

Note also that Publish definition configurations and Email templates are now available for Import/Export with the new engine. Email templates are unchecked by default.

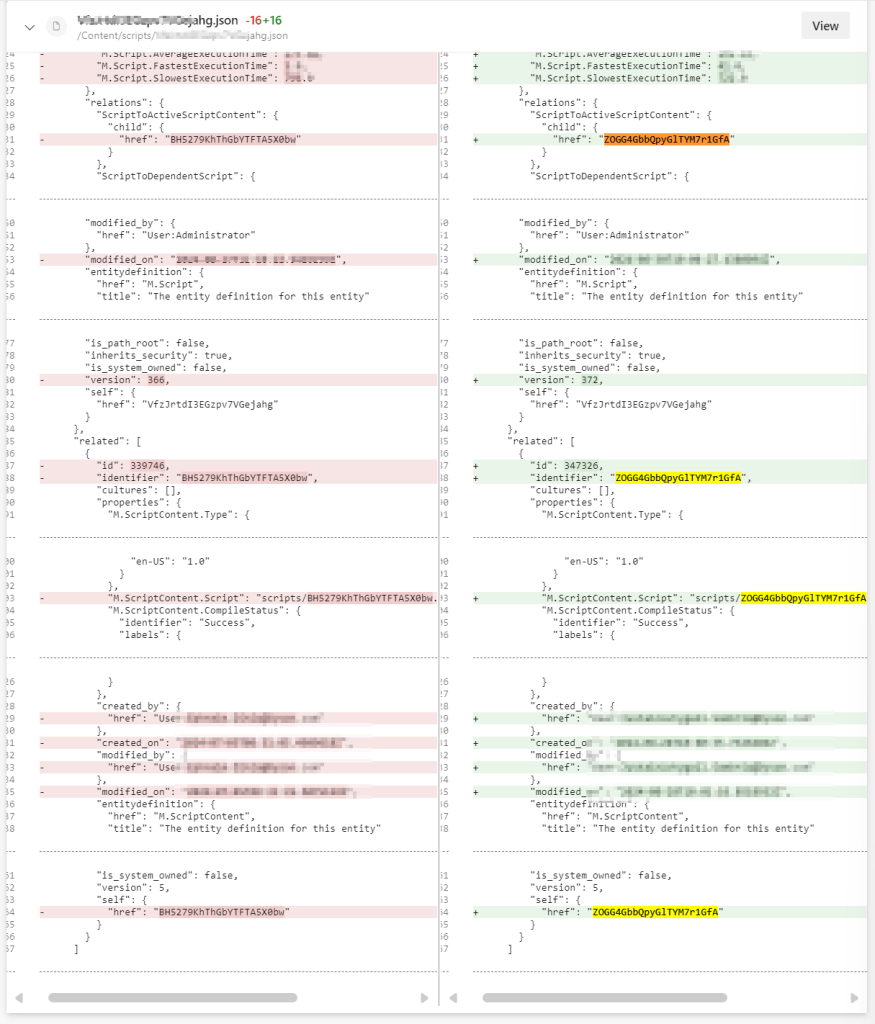

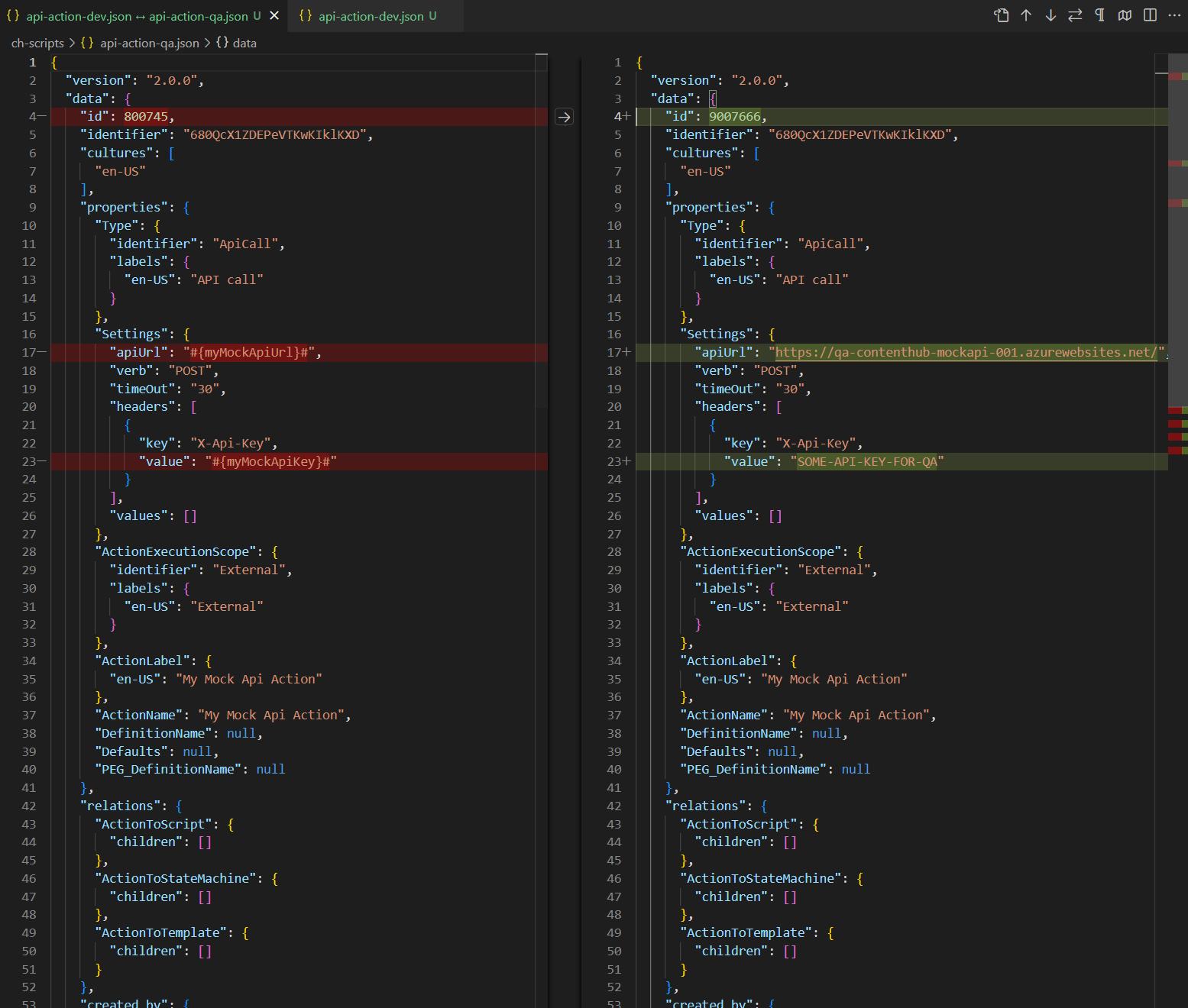

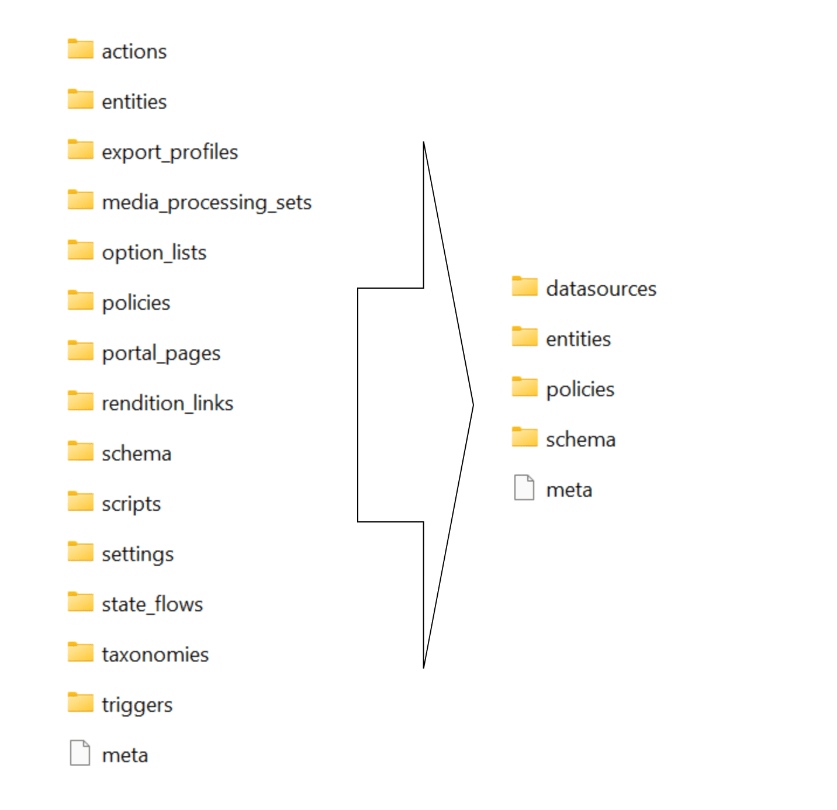

If you do a quick comparison between the export package from the legacy engine and the one from the new engine, it becomes clear that Sitecore has updated the packaging structure to organise content by resource type rather than by export category.

This change makes navigation more straightforward and ensures greater consistency throughout the package.

Summary of the changes between legacy and new export packages

Below is a graphic showing how the package structure has changed. On the left-hand side is the legacy package, and on the right-hand side is the new one.

Full comparison of package contents between old and new

Below is a more detailed comparison, showing how the packages differ.

| Component | Legacy package sub folders | New package sub folders |

| Copy profiles | copy_profiles | entities |

| Email templates | n/a | entities |

| Entity definitions | entities schema option_lists | datasources entities schema |

| Export profiles | export_profiles | entities |

| Media processing | media_processing_sets | entities |

| Option lists | option_lists | datasources |

| Policies | policies | datasources entities policies schema |

| Portal pages | entities portal_pages | datasources entities policies schema |

| Publish definition configurations | n/a | entities |

| Rendition links | rendition_links | entities |

| Settings | settings | entities |

| State flows | state_flows | datasources entities policies schema |

| Taxonomies | taxonomies | datasources entities schema |

| Triggers | actions triggers | entities |

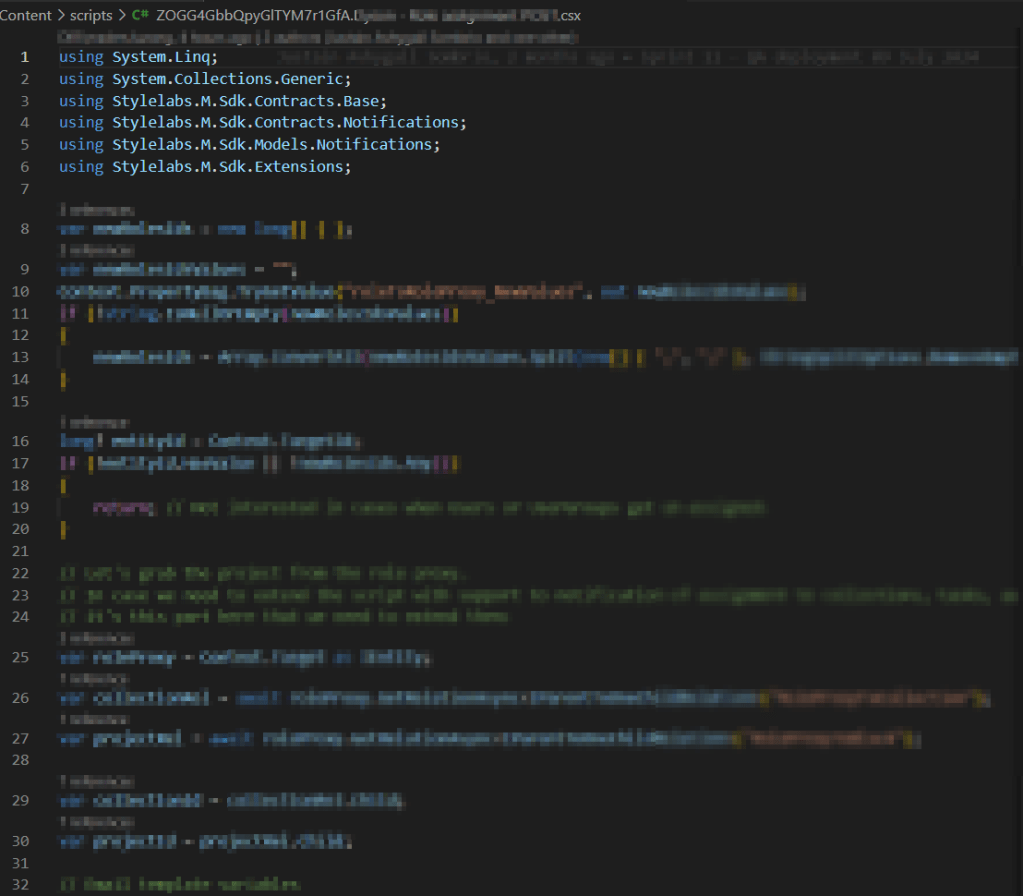

| Scripts | actions scripts | entities |
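If your pipeline scripts need to look up folders programmatically, the comparison table above can be captured as a small lookup. This is only a sketch: the dictionary is transcribed from the table (assuming each cell lists folder names separated by spaces), and the names `PACKAGE_FOLDERS` and `new_folders_for` are my own, not part of any Sitecore tooling.

```python
# Folder mapping transcribed from the comparison table.
# Each value is (legacy sub folders, new sub folders); "n/a" becomes an empty list.
ALL_FOUR = ["datasources", "entities", "policies", "schema"]

PACKAGE_FOLDERS = {
    "Copy profiles": (["copy_profiles"], ["entities"]),
    "Email templates": ([], ["entities"]),
    "Entity definitions": (["entities", "schema", "option_lists"],
                           ["datasources", "entities", "schema"]),
    "Export profiles": (["export_profiles"], ["entities"]),
    "Media processing": (["media_processing_sets"], ["entities"]),
    "Option lists": (["option_lists"], ["datasources"]),
    "Policies": (["policies"], ALL_FOUR),
    "Portal pages": (["entities", "portal_pages"], ALL_FOUR),
    "Publish definition configurations": ([], ["entities"]),
    "Rendition links": (["rendition_links"], ["entities"]),
    "Settings": (["settings"], ["entities"]),
    "State flows": (["state_flows"], ALL_FOUR),
    "Taxonomies": (["taxonomies"], ["datasources", "entities", "schema"]),
    "Triggers": (["actions", "triggers"], ["entities"]),
    "Scripts": (["actions", "scripts"], ["entities"]),
}


def new_folders_for(components):
    """Return the sorted new-engine folders touched by the given components."""
    folders = set()
    for name in components:
        _legacy, new = PACKAGE_FOLDERS[name]
        folders.update(new)
    return sorted(folders)


if __name__ == "__main__":
    # Exporting option lists and triggers touches these new-engine folders.
    print(new_folders_for(["Option lists", "Triggers"]))
    # → ['datasources', 'entities']
```

A lookup like this makes it easy to see which folders in source control a given export is expected to change.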

Resources are grouped by type

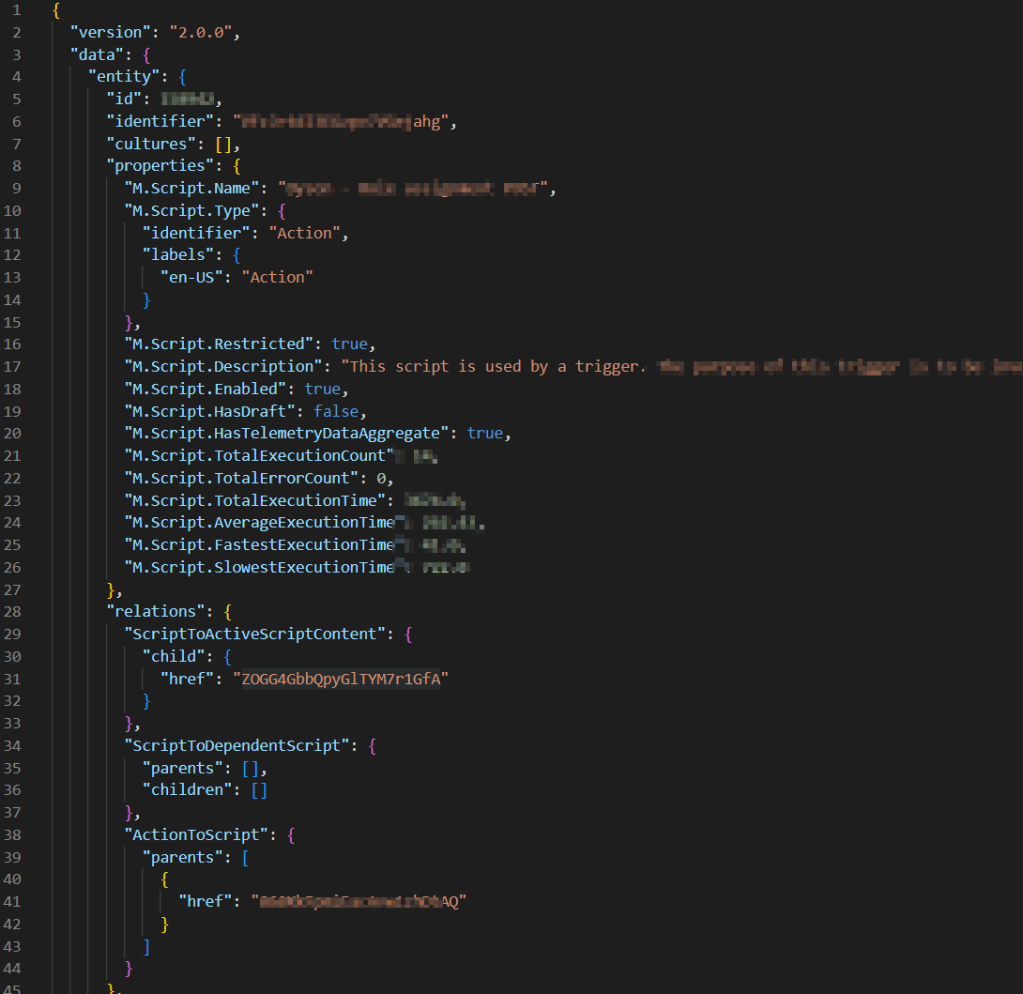

Instead of separate folders like portal_pages, media_processing_sets, or option_lists, the new export engine places files according to their resource type.

For example:

- All entities are stored in the entities/ folder.

- All datasources (such as option lists) are found in the datasources/ folder.

- Policies and schema files have their own dedicated folders.

Each resource is saved as an individual JSON file named with its unique identifier.
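To get a feel for this layout, you can take a quick inventory of an unpacked export. The sketch below assumes you have already extracted the package to a local directory; the function name `count_resources` is hypothetical, and it relies only on the fact that resources are individual JSON files grouped in top-level folders.

```python
from collections import Counter
from pathlib import Path


def count_resources(package_dir):
    """Count *.json files under each top-level folder of an unpacked package."""
    root = Path(package_dir)
    counts = Counter()
    for path in root.rglob("*.json"):
        relative = path.relative_to(root)
        # Skip JSON files that sit directly at the package root.
        if len(relative.parts) > 1:
            counts[relative.parts[0]] += 1
    return dict(counts)
```

Calling `count_resources("path/to/unpacked/package")` returns a dictionary keyed by folder name, which you can compare between two exports to spot unexpected differences.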

Related components are now separated

When a resource includes related items—such as a portal page referencing multiple components—each component is now saved in its own JSON file.

These files are no longer embedded or nested under the parent resource.

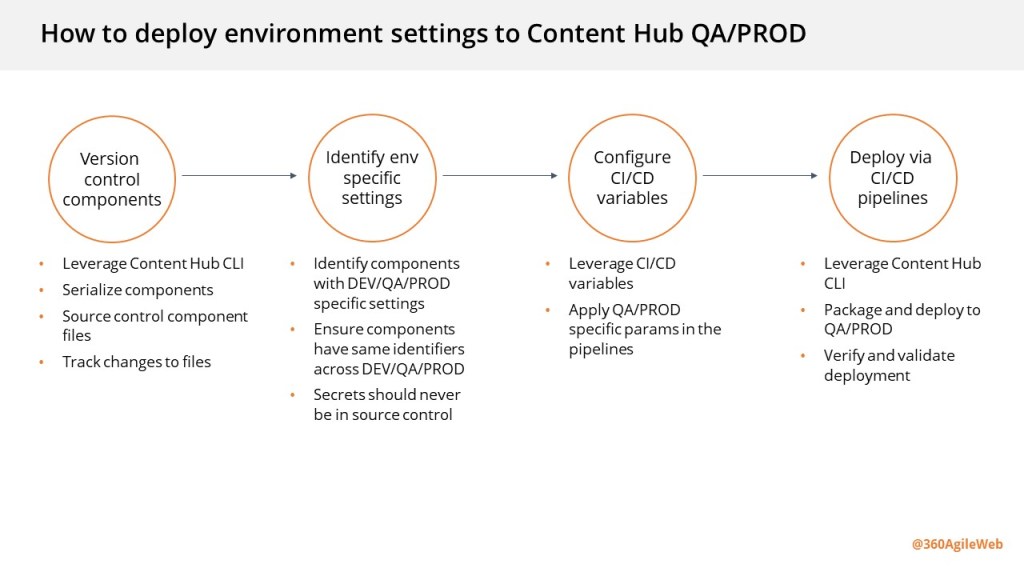

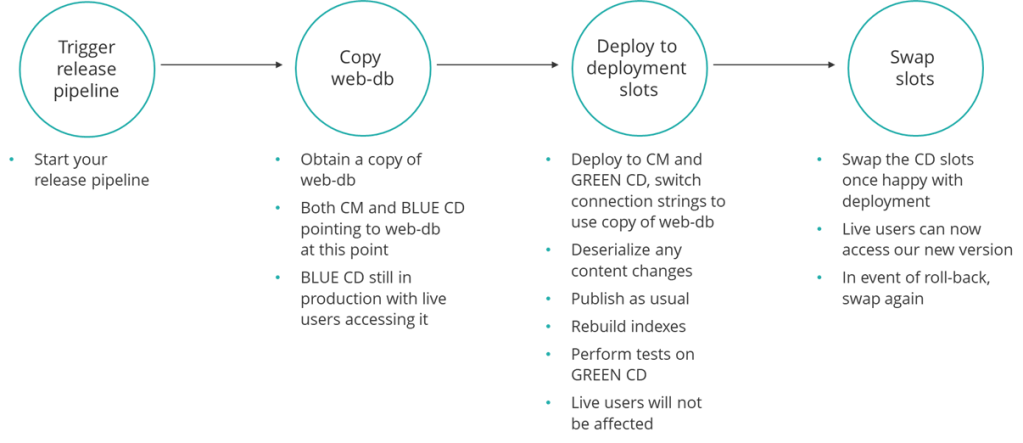

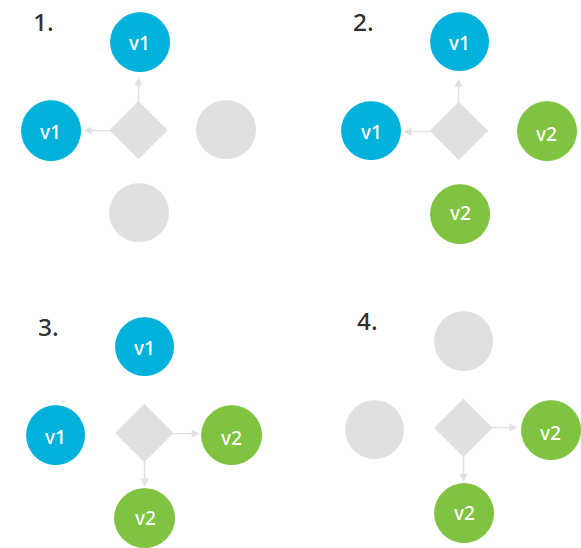

Updating your CICD pipelines

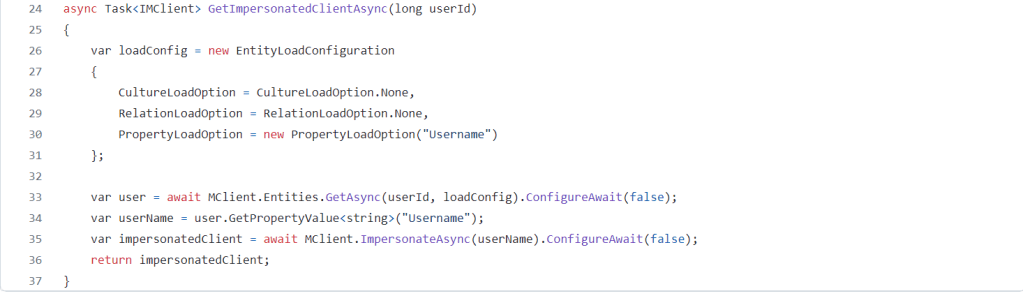

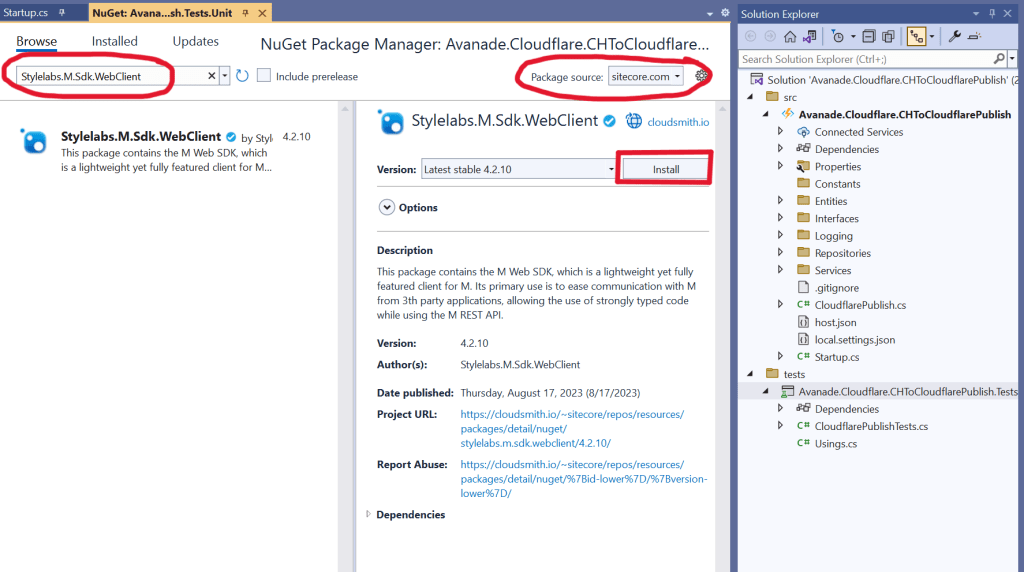

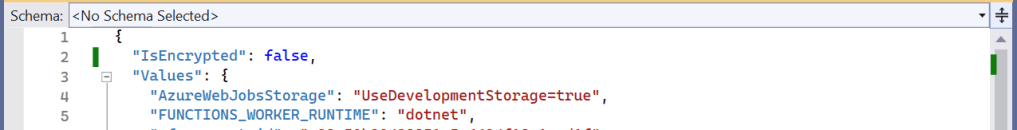

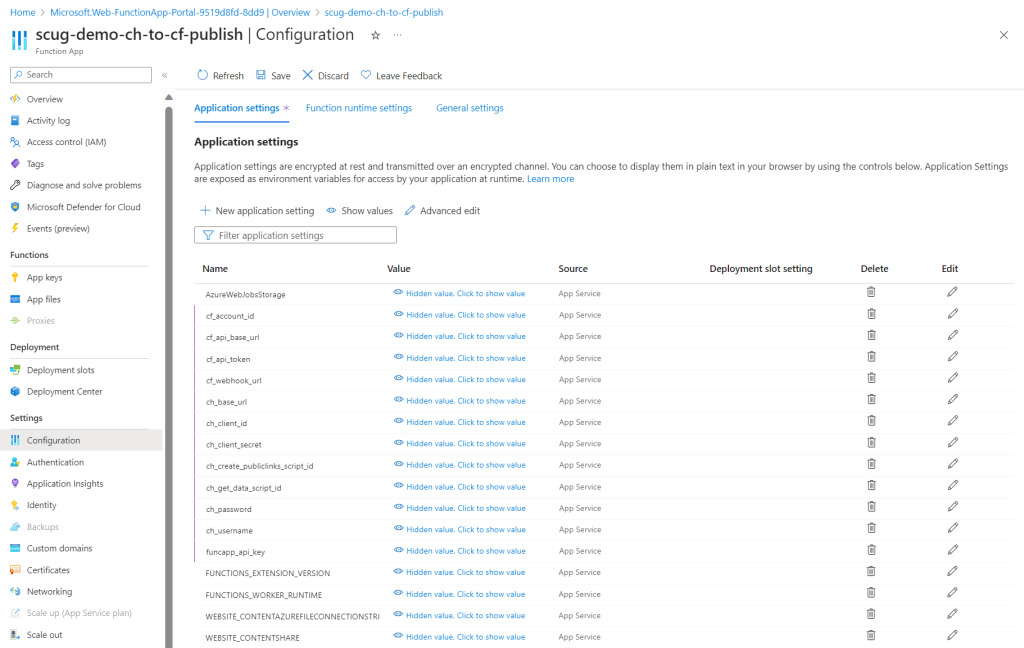

It is straightforward to update your existing CICD pipelines once you have analysed and understood the new package architecture. You can revisit my previous blog post, where I covered this topic in detail. You simply need to map your previous logic to the new package architecture. You will also need to re-baseline your Content Hub environments in source control so that they use the new package architecture.
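One guard you might add while refactoring is a check that refuses to deploy a package that still uses the legacy layout. The following is a heuristic sketch only: the folder names come from the comparison earlier in this post, the function name `detect_layout` is my own, and a legacy package that happens to contain only entities, schema, or policies folders would not be distinguishable this way.

```python
from pathlib import Path

# Folders that only appear in legacy packages, per the comparison in this post.
LEGACY_ONLY_FOLDERS = {
    "copy_profiles", "export_profiles", "media_processing_sets",
    "option_lists", "portal_pages", "rendition_links", "settings",
    "state_flows", "taxonomies", "actions", "triggers", "scripts",
}
# Folders used by the new engine (some of these also exist in legacy packages).
NEW_FOLDERS = {"datasources", "entities", "policies", "schema"}


def detect_layout(package_dir):
    """Guess whether an unpacked package uses the legacy or the new layout.

    Returns a tuple of (layout, legacy_folders_found), where layout is
    "legacy", "new", or "unknown".
    """
    top_level = {p.name for p in Path(package_dir).iterdir() if p.is_dir()}
    legacy_hits = sorted(top_level & LEGACY_ONLY_FOLDERS)
    if legacy_hits:
        return "legacy", legacy_hits
    if top_level & NEW_FOLDERS:
        return "new", []
    return "unknown", []
```

In a pipeline step, you could fail the build whenever the result is not `"new"`, so that an old baseline in source control is caught before it reaches an environment.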

Next steps

In this blog post, I have looked at the new Content Hub Import/Export engine. I dived into how you can analyse the packages produced by the legacy engine and compare them with those from the new engine. I hope you find this valuable and that the analysis gives you a view of what has changed in the new package architecture.

Please let me know if you have any comments on the above or would like me to provide further details.